Preface

The rapid pace of innovation in generative AI promises to change how we live and work, but it’s getting increasingly difficult to keep up. The number of [AI papers published on arXiv is growing exponentially](https://oreil.ly/EN5ay), [Stable Diffusion](https://oreil.ly/QX-yy) has been among the fastest growing open source projects in history, and AI art tool [Midjourney’s Discord server](https://oreil.ly/ZVZ5o) has tens of millions of members, surpassing even the largest gaming communities. What most captured the public’s imagination was OpenAI’s release of ChatGPT, [which reached 100 million users in two months](https://oreil.ly/FbYWk), making it the fastest-growing consumer app in history. Learning to work with AI has quickly become one of the most in-demand skills.

生成式人工智能的快速创新有望改变我们的生活和工作方式,但跟上它变得越来越困难。 arXiv 上发表的 AI 论文数量呈指数级增长,Stable Diffusion 已成为历史上增长最快的开源项目之一,AI 艺术工具 Midjourney 的 Discord 服务器拥有数千万会员,甚至超过了最大的游戏社区。最激发公众想象力的是OpenAI发布的ChatGPT,两个月内用户数量就达到1亿,成为历史上增长最快的消费类应用程序。学习使用人工智能已迅速成为最受欢迎的技能之一。

Everyone using AI professionally quickly learns that the quality of the output depends heavily on what you provide as input. The discipline of prompt engineering has arisen as a set of best practices for improving the reliability, efficiency, and accuracy of AI models. “In ten years, half of the world’s jobs will be in prompt engineering,” claims Robin Li, the cofounder and CEO of Chinese tech giant Baidu. However, we expect prompting to be a skill required of many jobs, akin to proficiency in Microsoft Excel, rather than a popular job title in itself. This new wave of disruption is changing everything we thought we knew about computers. We’re used to writing algorithms that return the same result every time—not so for AI, where the responses are non-deterministic. Cost and latency are real factors again, after decades of Moore’s law making us complacent in expecting real-time computation at negligible cost. The biggest hurdle is the tendency of these models to confidently make things up, dubbed hallucination, causing us to rethink the way we evaluate the accuracy of our work.

每个专业使用人工智能的人都会很快了解到,输出的质量在很大程度上取决于您提供的输入内容。即时工程学科作为一套提高人工智能模型可靠性、效率和准确性的最佳实践而出现。中国科技巨头百度联合创始人兼首席执行官李彦宏表示:“十年内,世界上一半的工作岗位将来自即时工程。”然而,我们预计提示将成为许多工作所需的一项技能,类似于熟练掌握 Microsoft Excel,而不是其本身是一个流行的职位名称。这波新的颠覆浪潮正在改变我们对计算机的一切认识。我们习惯于编写每次返回相同结果的算法,但对于人工智能来说却并非如此,因为人工智能的响应是不确定的。几十年来,摩尔定律让我们沾沾自喜地期望以可忽略不计的成本进行实时计算,成本和延迟再次成为真正的因素。最大的障碍是这些模型倾向于自信地编造事实,这被称为幻觉,导致我们重新思考评估工作准确性的方式。

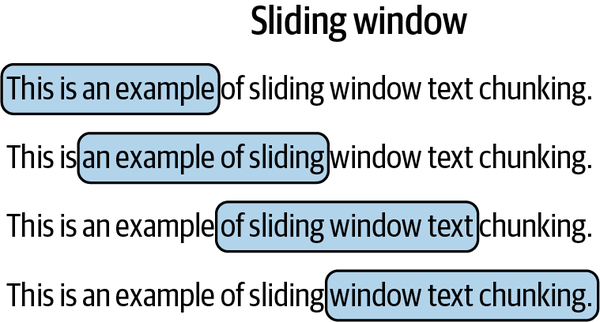

We’ve been working with generative AI since the GPT-3 beta in 2020, and as we saw the models progress, many early prompting tricks and hacks became no longer necessary. Over time a consistent set of principles emerged that were still useful with the newer models, and worked across both text and image generation. We have written this book based on these timeless principles, helping you learn transferable skills that will continue to be useful no matter what happens with AI over the next five years. The key to working with AI isn’t “figuring out how to hack the prompt by adding one magic word to the end that changes everything else,” as OpenAI cofounder Sam Altman asserts, but what will always matter is the “quality of ideas and the understanding of what you want.” While we don’t know if we’ll call it “prompt engineering” in five years, working effectively with generative AI will only become more important.

自 2020 年 GPT-3 测试版以来,我们一直在研究生成式人工智能,随着我们看到模型的进步,许多早期的提示技巧和技巧变得不再必要。随着时间的推移,出现了一套一致的原则,这些原则对于新模型仍然有用,并且适用于文本和图像生成。我们根据这些永恒的原则编写了这本书,帮助您学习可转移的技能,无论未来五年人工智能发生什么,这些技能都将继续有用。正如 OpenAI 联合创始人萨姆·奥尔特曼 (Sam Altman) 所言,使用人工智能的关键并不在于“弄清楚如何通过在末尾添加一个神奇的单词来改变其他一切来破解提示”,但永远重要的是“想法的质量和理解你想要什么。”虽然我们不知道五年后是否会称之为“即时工程”,但有效地使用生成式人工智能只会变得更加重要。

Software Requirements for This Book

本书的软件要求

All of the code in this book is in Python and was designed to be run in a Jupyter Notebook or Google Colab notebook. The concepts taught in the book are transferable to JavaScript or any other coding language if preferred, though the primary focus of this book is on prompting techniques rather than traditional coding skills. The code can all be found on GitHub, and we will link to the relevant notebooks throughout. It’s highly recommended that you utilize the GitHub repository and run the provided examples while reading the book.

本书中的所有代码均采用 Python 编写,旨在在 Jupyter Notebook 或 Google Colab Notebook 中运行。书中教授的概念可以转移到 JavaScript 或任何其他编码语言(如果愿意),尽管本书的主要重点是提示技术而不是传统的编码技能。代码都可以在 GitHub 上找到,我们将在全文中链接到相关笔记本。强烈建议您在阅读本书时使用 GitHub 存储库并运行提供的示例。

For non-notebook examples, you can run the script with the format python content/chapter_x/script.py in your terminal, where x is the chapter number and script.py is the name of the script. In some instances, API keys need to be set as environment variables, and we will make that clear. The packages used update frequently, so install our requirements.txt in a virtual environment before running code examples.

对于非笔记本示例,您可以在终端中运行格式为 python content/chapter_x/script.py 的脚本,其中 x 是章节编号, script.py 是章节名称脚本。在某些情况下,API 密钥需要设置为环境变量,我们将明确这一点。使用的软件包经常更新,因此在运行代码示例之前在虚拟环境中安装我们的requirements.txt。

The requirements.txt file is generated for Python 3.9. If you want to use a different version of Python, you can generate a new requirements.txt from this requirements.in file found within the GitHub repository, by running these commands:

requests.txt 文件是为 Python 3.9 生成的。如果您想使用不同版本的 Python,可以通过运行以下命令从 GitHub 存储库中找到的requirements.in 文件生成新的requirements.txt:

`pip install pip-tools`

`pip-compile requirements.in`

For Mac users: 对于 Mac 用户:

Open Terminal: You can find the Terminal application in your Applications folder, under Utilities, or use Spotlight to search for it.

打开终端:您可以在“应用程序”文件夹中的“实用程序”下找到终端应用程序,或使用 Spotlight 进行搜索。Navigate to your project folder: Use the

cdcommand to change the directory to your project folder. For example:cd path/to/your/project.

导航到您的项目文件夹:使用cd命令将目录更改为您的项目文件夹。例如:cd path/to/your/project。Create the virtual environment: Use the following command to create a virtual environment named

venv(you can name it anything):python3 -m venv venv.

创建虚拟环境:使用以下命令创建名为venv的虚拟环境(您可以将其命名为任何名称):python3 -m venv venv。Activate the virtual environment: Before you install packages, you need to activate the virtual environment. Do this with the command

source venv/bin/activate.

激活虚拟环境:在安装软件包之前,您需要激活虚拟环境。使用命令source venv/bin/activate执行此操作。Install packages: Now that your virtual environment is active, you can install packages using

pip. To install packages from the requirements.txt file, usepip install -r requirements.txt.

安装软件包:现在您的虚拟环境已激活,您可以使用pip安装软件包。要从requirements.txt 文件安装软件包,请使用pip install -r requirements.txt。Deactivate virtual environment: When you’re done, you can deactivate the virtual environment by typing

deactivate.

停用虚拟环境:完成后,您可以通过键入deactivate来停用虚拟环境。

For Windows users: 对于 Windows 用户:

Open Command Prompt: You can search for

cmdin the Start menu.

打开命令提示符:您可以在“开始”菜单中搜索cmd。Navigate to your project folder: Use the

cdcommand to change the directory to your project folder. For example:cd path\to\your\project.

导航到您的项目文件夹:使用cd命令将目录更改为您的项目文件夹。例如:cd path\to\your\project。Create the virtual environment: Use the following command to create a virtual environment named

venv:python -m venv venv.

创建虚拟环境:使用以下命令创建名为venv的虚拟环境:python -m venv venv。Activate the virtual environment: To activate the virtual environment on Windows, use

.\venv\Scripts\activate.

激活虚拟环境:要在 Windows 上激活虚拟环境,请使用.\venv\Scripts\activate。Install packages: With the virtual environment active, install the required packages:

pip install -r requirements.txt.

安装软件包:在虚拟环境处于活动状态的情况下,安装所需的软件包:pip install -r requirements.txt。Deactivate the virtual environment: To exit the virtual environment, simply type:

deactivate.

停用虚拟环境:要退出虚拟环境,只需键入:deactivate。

Here are some additional tips on setup:

以下是有关设置的一些附加提示:

Always ensure your Python is up-to-date to avoid compatibility issues.

始终确保您的 Python 是最新的以避免兼容性问题。Remember to activate your virtual environment whenever you work on the project.

每当您处理项目时,请记住激活您的虚拟环境。The requirements.txt file should be in the same directory where you create your virtual environment, or you should specify the path to it when using

pip install -r.

requirements.txt 文件应该位于您创建虚拟环境的同一目录中,或者您应该在使用pip install -r时指定它的路径。

Access to an OpenAI developer account is assumed, as your OPENAI_API_KEY must be set as an environment variable in any examples importing the OpenAI library, for which we use version 1.0. Quick-start instructions for setting up your development environment can be found in OpenAI’s documentation on their website.

假设可以访问 OpenAI 开发者帐户,因为在导入 OpenAI 库的任何示例中,您的 OPENAI_API_KEY 必须设置为环境变量,我们使用版本 1.0。有关设置开发环境的快速入门说明,请参阅 OpenAI 网站上的文档。

You must also ensure that billing is enabled on your OpenAI account and that a valid payment method is attached to run some of the code within the book. The examples in the book use GPT-4 where not stated, though we do briefly cover Anthropic’s competing Claude 3 model, as well as Meta’s open source Llama 3 and Google Gemini.

您还必须确保您的 OpenAI 帐户启用了计费功能,并且附加了有效的付款方式来运行书中的某些代码。书中的示例使用 GPT-4(未说明),但我们确实简要介绍了 Anthropic 的竞争 Claude 3 模型,以及 Meta 的开源 Llama 3 和 Google Gemini。

For image generation we use Midjourney, for which you need a Discord account to sign up, though these principles apply equally to DALL-E 3 (available with a ChatGPT Plus subscription or via the API) or Stable Diffusion (available as an API or it can run locally on your computer if it has a GPU). The image generation examples in this book use Midjourney v6, Stable Diffusion v1.5 (as many extensions are still only compatible with this version), or Stable Diffusion XL, and we specify the differences when this is important.

对于图像生成,我们使用 Midjourney,您需要注册一个 Discord 帐户,尽管这些原则同样适用于 DALL-E 3(通过 ChatGPT Plus 订阅或通过 API 提供)或 Stable Diffusion(作为 API 提供或它)如果您的计算机有 GPU,则可以在本地运行)。本书中的图像生成示例使用 Midjourney v6、Stable Diffusion v1.5(因为许多扩展仍然只与此版本兼容)或 Stable Diffusion XL,并且当这很重要时我们会指定差异。

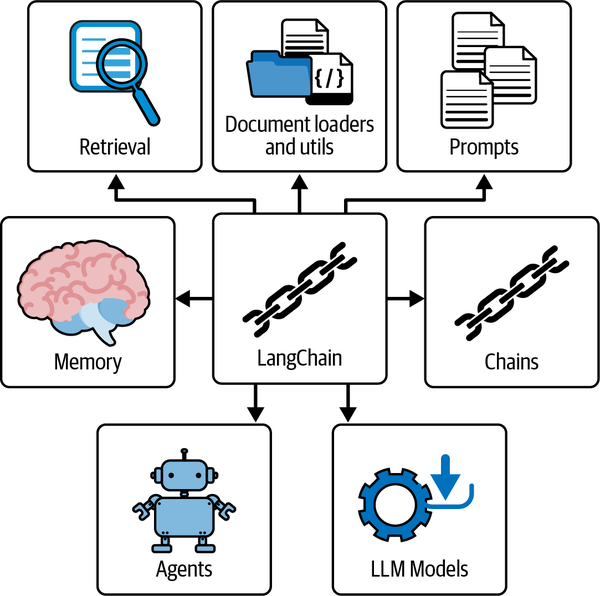

We provide examples using open source libraries wherever possible, though we do include commercial vendors where appropriate—for example, Chapter 5 on vector databases demonstrates both FAISS (an open source library) and Pinecone (a paid vendor). The examples demonstrated in the book should be easily modifiable for alternative models and vendors, and the skills taught are transferable. Chapter 4 on advanced text generation is focused on the LLM framework LangChain, and Chapter 9 on advanced image generation is built on AUTOMATIC1111’s open source Stable Diffusion Web UI.

我们尽可能提供使用开源库的示例,尽管我们确实在适当的情况下包含了商业供应商,例如,关于矢量数据库的第 5 章演示了 FAISS(开源库)和 Pinecone(付费供应商)。书中演示的示例应该可以轻松修改以适应替代模型和供应商,并且所教授的技能是可以转移的。第 4 章关于高级文本生成的重点是 LLM 框架 LangChain,第 9 章关于高级图像生成的内容基于 AUTOMATIC1111 的开源 Stable Diffusion Web UI。

Conventions Used in This Book

本书中使用的约定

The following typographical conventions are used in this book:

本书使用以下印刷约定:

Italic 斜体

Indicates new terms, URLs, email addresses, filenames, and file extensions.

表示新术语、URL、电子邮件地址、文件名和文件扩展名。

Constant width

Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

用于程序列表,以及在段落中引用程序元素,例如变量或函数名称、数据库、数据类型、环境变量、语句和关键字。

Constant width bold

Shows commands or other text that should be typed literally by the user.

显示应由用户逐字键入的命令或其他文本。

Constant width italic

Shows text that should be replaced with user-supplied values or by values determined by context.

显示应替换为用户提供的值或上下文确定的值的文本。

TIP

This element signifies a tip or suggestion.

该元素表示提示或建议。

NOTE 笔记

This element signifies a general note.

该元素表示一般注释。

WARNING 警告

This element indicates a warning or caution.

该元素表示警告或警告。

Throughout the book we reinforce what we call the Five Principles of Prompting, identifying which principle is most applicable to the example at hand. You may want to refer to Chapter 1, which describes the principles in detail.

在整本书中,我们强化了所谓的“提示五项原则”,确定哪项原则最适用于当前的示例。您可能需要参考第 1 章,其中详细描述了这些原则。

PRINCIPLE NAME 原理名称

This will explain how the principle is applied to the current example or section of text.

这将解释如何将该原理应用于当前的示例或文本部分。

Using Code Examples 使用代码示例

Supplemental material (code examples, exercises, etc.) is available for download at https://oreil.ly/prompt-engineering-for-generative-ai.

补充材料(代码示例、练习等)可在 https://oreil.ly/prompt-engineering-for-generative-ai 下载。

If you have a technical question or a problem using the code examples, please send email to bookquestions@oreilly.com.

如果您有技术问题或使用代码示例时遇到问题,请发送电子邮件至 bookquestions@oreilly.com。

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

本书旨在帮助您完成工作。一般来说,如果本书提供了示例代码,您就可以在您的程序和文档中使用它。除非您要复制大部分代码,否则您无需联系我们以获得许可。例如,使用本书中的几段代码编写一个程序不需要许可。销售或分发 O’Reilly 书籍中的示例确实需要许可。通过引用本书和示例代码来回答问题不需要许可。将本书中的大量示例代码合并到您的产品文档中确实需要许可。

We appreciate, but generally do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Prompt Engineering for Generative AI by James Phoenix and Mike Taylor (O’Reilly). Copyright 2024 Saxifrage, LLC and Just Understanding Data LTD, 978-1-098-15343-4.”

我们赞赏但通常不要求归属。归属通常包括标题、作者、出版商和 ISBN。例如:“James Phoenix 和 Mike Taylor (O’Reilly) 的《生成式 AI 快速工程》。版权所有 2024 Saxifrage, LLC 和 Just Understanding Data LTD,978-1-098-15343-4。”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

如果您认为您对代码示例的使用不符合合理使用或上述许可的范围,请随时通过permissions@oreilly.com 与我们联系。

O’Reilly Online Learning

奥莱利在线学习

NOTE 笔记

For more than 40 years, O’Reilly Media has provided technology and business training, knowledge, and insight to help companies succeed.

40 多年来,O’Reilly Media 一直提供技术和业务培训、知识和见解来帮助公司取得成功。

Our unique network of experts and innovators share their knowledge and expertise through books, articles, and our online learning platform. O’Reilly’s online learning platform gives you on-demand access to live training courses, in-depth learning paths, interactive coding environments, and a vast collection of text and video from O’Reilly and 200+ other publishers. For more information, visit https://oreilly.com.

我们独特的专家和创新者网络通过书籍、文章和我们的在线学习平台分享他们的知识和专业知识。 O’Reilly 的在线学习平台让您可以按需访问实时培训课程、深入学习路径、交互式编码环境以及来自 O’Reilly 和 200 多家其他出版商的大量文本和视频。欲了解更多信息,请访问 https://oreilly.com。

How to Contact Us

如何联系我们

Please address comments and questions concerning this book to the publisher:

请向出版商提出有关本书的意见和问题:

- O’Reilly Media, Inc. 奥莱利媒体公司

- 1005 Gravenstein Highway North

格雷文斯坦公路北1005号 - Sebastopol, CA 95472 塞瓦斯托波尔, CA 95472

- 800-889-8969 (in the United States or Canada)

800-889-8969(美国或加拿大) - 707-827-7019 (international or local)

707-827-7019(国际或本地) - 707-829-0104 (fax) 707-829-0104(传真)

- support@oreilly.com https://www.oreilly.com/about/contact.html We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at https://oreil.ly/prompt-engineering-generativeAI. 我们有本书的网页,其中列出了勘误表、示例和任何其他信息。您可以通过 https://oreil.ly/prompt-engineering-generativeAI 访问此页面。

For news and information about our books and courses, visit https://oreilly.com. 有关我们的书籍和课程的新闻和信息,请访问 https://oreilly.com。

Find us on LinkedIn: https://linkedin.com/company/oreilly-media. 在 LinkedIn 上找到我们:https://linkedin.com/company/oreilly-media。

Watch us on YouTube: https://youtube.com/oreillymedia. 在 YouTube 上观看我们的视频:https://youtube.com/oreillymedia。

Acknowledgments 致谢 We’d like to thank the following people for their contribution in conducting a technical review of the book and their patience in correcting a fast-moving target: 我们要感谢以下人员对本书进行技术审查所做的贡献以及他们在纠正快速变化的目标方面的耐心:

Mayo Oshin, early LangChain contributor and founder at SeinnAI Analytics Mayo Oshin,LangChain 早期贡献者和 SeinnAI Analytics 创始人

Ellis Crosby, founder at Scarlett Panda and AI agency Incremen.to 埃利斯·克罗斯比 (Ellis Crosby),Scarlett Panda 和人工智能机构 Incremen.to 的创始人

Dave Pawson, O’Reilly author of XSL-FO Dave Pawson,O’Reilly XSL-FO 的作者

Mark Phoenix, a senior software engineer 马克·菲尼克斯 (Mark Phoenix),高级软件工程师

Aditya Goel, GenAI consultant Aditya Goel,GenAI 顾问

We are also grateful to our families for their patience and understanding and would like to reassure them that we still prefer talking to them over ChatGPT. 我们还感谢家人的耐心和理解,并向他们保证我们仍然更喜欢与他们交谈而不是 ChatGPT。

1. The Five Principles Of Prompting

# Chapter 1. The Five Principles of Prompting

第一章 提示的五项原则

Prompt engineering is the process of discovering prompts that reliably yield useful or desired results.

提示工程是发现能够可靠地产生有用或期望结果的提示的过程。

A prompt is the input you provide, typically text, when interfacing with an AI model like ChatGPT or Midjourney. The prompt serves as a set of instructions the model uses to predict the desired response: text from large language models (LLMs) like ChatGPT, or images from diffusion models like Midjourney.

提示是您在与 ChatGPT 或 Midjourney 等 AI 模型交互时提供的输入,通常是文本。提示充当模型用于预测所需响应的一组指令:来自 ChatGPT 等大型语言模型 (LLMs) 的文本,或来自 Midjourney 等扩散模型的图像。

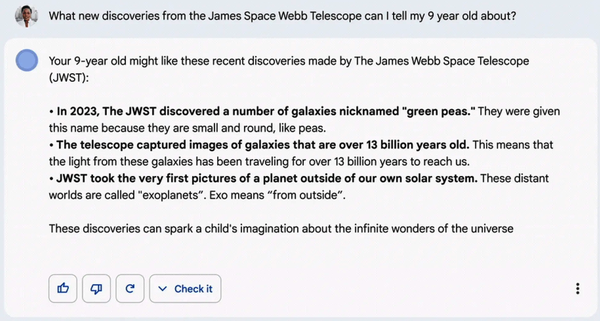

Here is a simple example of a prompt input for a product name generator (inspired by one of OpenAI’s examples), and the resulting output from ChatGPT.

下面是一个简单的产品名称生成器提示输入示例(受到 OpenAI 示例之一的启发),以及 ChatGPT 的结果输出。

Input: 输入:

Can I have a list of product names for a pair of shoes that

can fit any foot size?

Output: 输出:

Certainly! Here's a list of product names for a pair of shoes

that can fit any foot size:

UniFit SoleStrides FlexiSize All-Fit Shoes OmniFit FootFlex … AnyStep AdaptiWalkers FlexiRange UniversalSteps PerfectGlide MultiFit Shoes

This is already a remarkable response for a naive prompt, which feels like magic because we got here with very little effort. As the state-of-the-art models improve, the likelihood you will get good enough results on your first try goes up. For any throwaway interactions with an AI, where you don’t plan to do the same task again, the naive approach is all you need.

对于一个天真的提示来说,这已经是一个了不起的反应,这感觉就像魔术一样,因为我们几乎不费吹灰之力就到达了这里。随着最先进模型的改进,您在第一次尝试中获得足够好的结果的可能性就会增加。对于任何与人工智能的一次性交互,你不打算再次执行相同的任务,简单的方法就是你所需要的。

However, if you planned to put this prompt into production, you’d benefit from investing more work into getting it right. Mistakes cost you money in terms of the fees OpenAI charges based on the length of the prompt and response, as well as the time spent fixing mistakes. If you were building a product name generator with thousands of users, there are some obvious issues you’d want attempt to fix:

但是,如果您计划将此提示投入生产,那么投入更多的工作来使其正确,您将会受益匪浅。错误会导致您损失金钱,OpenAI 根据提示和响应的长度以及修复错误所花费的时间收取费用。如果您正在构建一个拥有数千名用户的产品名称生成器,那么您需要尝试修复一些明显的问题:

Vague direction 方向模糊

You’re not briefing the AI on what style of name you want, or what attributes it should have. Do you want a single word or a concatenation? Can the words be made up, or is it important that they’re in real English? Do you want the AI to emulate somebody you admire who is famous for great product names?

你不会向人工智能介绍你想要什么风格的名字,或者它应该具有什么属性。您想要单个单词还是串联单词?这些单词可以是虚构的吗?或者它们是真正的英语很重要吗?您是否希望人工智能模仿您所钦佩的以伟大产品名称而闻名的人?

Unformatted output 无格式输出

You’re getting back a list of separated names line by line, of unspecified length. When you run this prompt multiple times, you’ll see sometimes it comes back with a numbered list, and often it has text at the beginning, which makes it hard to parse programmatically.

您将逐行返回一个未指定长度的分隔名称列表。当您多次运行此提示时,您会看到有时它会返回一个编号列表,并且通常在开头有文本,这使得以编程方式解析变得困难。

Missing examples 缺少示例

You haven’t given the AI any examples of what good names look like. It’s autocompleting using an average of its training data, i.e., the entire internet (with all its inherent bias), but is that what you want? Ideally you’d feed it examples of successful names, common names in an industry, or even just other names you like.

你还没有给人工智能任何好名字的例子。它使用其训练数据的平均值(即整个互联网(及其所有固有的偏见))自动完成,但这就是您想要的吗?理想情况下,您可以向其提供成功名称、行业中常见名称的示例,甚至只是您喜欢的其他名称。

Limited evaluation 有限评价

You have no consistent or scalable way to define which names are good or bad, so you have to manually review each response. If you can institute a rating system or other form of measurement, you can optimize the prompt to get better results and identify how many times it fails.

您没有一致或可扩展的方法来定义哪些名称好或坏,因此您必须手动检查每个响应。如果您可以建立评级系统或其他形式的测量,您可以优化提示以获得更好的结果并确定失败的次数。

No task division 没有任务划分

You’re asking a lot of a single prompt here: there are lots of factors that go into product naming, and this important task is being naively outsourced to the AI all in one go, with no task specialization or visibility into how it’s handling this task for you.

你在这里问了很多单一提示:产品命名涉及很多因素,而这项重要任务被天真地一次性外包给人工智能,没有任务专门化或了解它如何处理这个问题给你的任务。

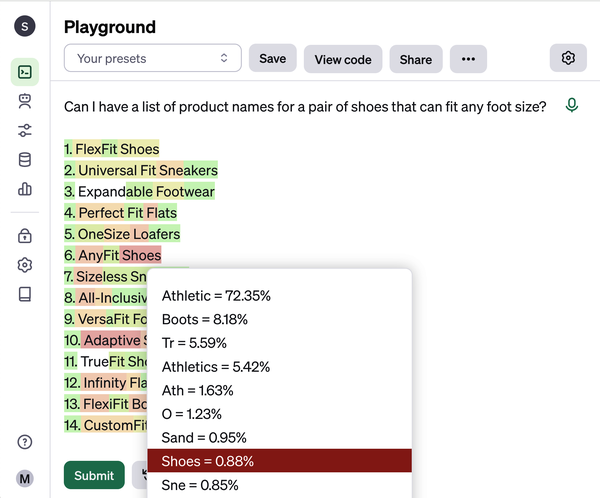

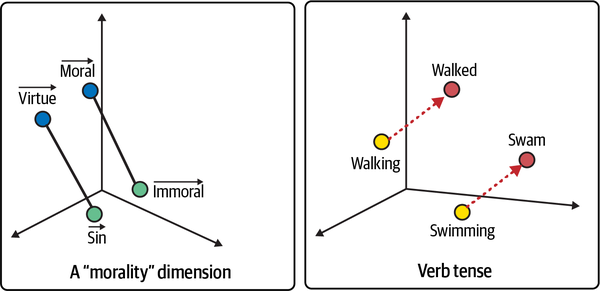

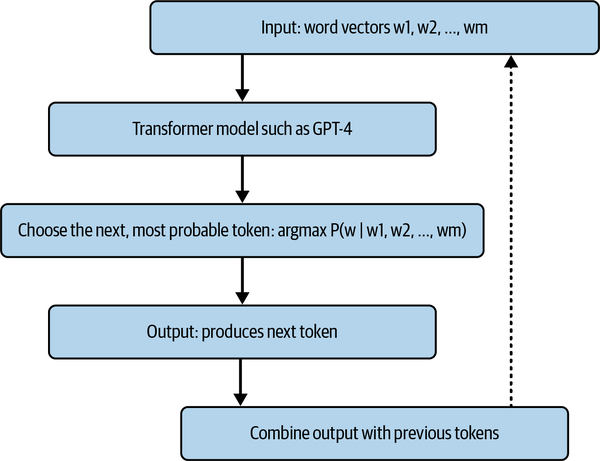

Addressing these problems is the basis for the core principles we use throughout this book. There are many different ways to ask an AI model to do the same task, and even slight changes can make a big difference. LLMs work by continuously predicting the next token (approximately three-fourths of a word), starting from what was in your prompt. Each new token is selected based on its probability of appearing next, with an element of randomness (controlled by the temperature parameter). As demonstrated in Figure 1-1, the word shoes had a lower probability of coming after the start of the name AnyFit (0.88%), where a more predictable response would be Athletic (72.35%).

解决这些问题是我们在本书中使用的核心原则的基础。要求人工智能模型完成相同任务的方法有很多种,即使是微小的改变也会产生很大的差异。 LLMs 从提示中的内容开始,不断预测下一个标记(大约四分之三的单词)。每个新令牌都是根据其接下来出现的概率进行选择的,并具有随机性(由温度参数控制)。如图 1-1 所示,“鞋”一词出现在 AnyFit 名称开头之后的概率较低 (0.88%),而更可预测的响应是“运动”(72.35%)。

Figure 1-1. How the response breaks down into tokens

图 1-1。响应如何分解为令牌

LLMs are trained on essentially the entire text of the internet, and are then further fine-tuned to give helpful responses. Average prompts will return average responses, leading some to be underwhelmed when their results don’t live up to the hype. What you put in your prompt changes the probability of every word generated, so it matters a great deal to the results you’ll get. These models have seen the best and worst of what humans have produced and are capable of emulating almost anything if you know the right way to ask. OpenAI charges based on the number of tokens used in the prompt and the response, so prompt engineers need to make these tokens count by optimizing prompts for cost, quality, and reliability.

LLMs 基本上接受了互联网整个文本的训练,然后进一步微调以提供有用的响应。一般的提示将返回一般的响应,导致一些人在结果不符合宣传时感到不知所措。你在提示中输入的内容会改变生成每个单词的概率,因此它对你得到的结果非常重要。这些模型已经看到了人类创造的最好和最差的东西,并且如果你知道正确的提问方式,它们几乎能够模拟任何东西。 OpenAI 根据提示和响应中使用的令牌数量进行收费,因此提示工程师需要通过优化提示的成本、质量和可靠性来使这些令牌计数。

Here’s the same example with the application of several prompt engineering techniques. We ask for names in the style of Steve Jobs, state that we want a comma-separated list, and supply examples of the task done well.

这是应用了几种快速工程技术的同一示例。我们以史蒂夫·乔布斯的风格询问姓名,声明我们想要一个以逗号分隔的列表,并提供出色完成任务的示例。

Input: 输入:

Brainstorm a list of product names for a shoe that fits any foot size, in the style of Steve Jobs.

Return the results as a comma-separated list, in this format: Product description: A shoe that fits any foot size Product names: [list of 3 product names]

Examples

Product description: A refrigerator that dispenses beer Product names: iBarFridge, iFridgeBeer, iDrinkBeerFridge

Product description: A watch that can tell accurate time in space Product names: iNaut, iSpace, iTime

Product description: A home milkshake maker Product names: iShake, iSmoothie, iShake Mini

Output: 输出:

Product description: A shoe that fits any foot size Product names: iFitFoot, iPerfectFit, iShoeSize

While no prompt is ever perfect, this prompt is optimized to reliably deliver solid product names in the right format. The user of your product name generator can choose somebody other than Steve Jobs to get the types of names they like, they can change the response format if needed, and the output of this prompt can become the input of another. Finally, you could periodically update the examples you use in the prompt based on user feedback, making your system smarter over time.

虽然没有任何提示是完美的,但此提示经过优化,可以以正确的格式可靠地提供可靠的产品名称。产品名称生成器的用户可以选择史蒂夫·乔布斯以外的其他人来获取他们喜欢的名称类型,如果需要,他们可以更改响应格式,并且此提示的输出可以成为另一个提示的输入。最后,您可以根据用户反馈定期更新提示中使用的示例,从而使您的系统随着时间的推移变得更加智能。

Overview of the Five Principles of Prompting

提示五项原则概述

The process for optimizing this prompt follows the Five Principles of Prompting, which we will dissect using this example in the remainder of this chapter, and recall throughout the book. They map exactly to the five issues we raised when discussing the naive text prompt. You’ll find references back to these principles throughout the rest of the book to help you connect the dots to how they’re used in practice. The Five Principles of Prompting are as follows:

优化这个提示的过程遵循提示的五项原则,我们将在本章的其余部分使用这个例子进行剖析,并在整本书中回顾。它们准确地反映了我们在讨论幼稚文本提示时提出的五个问题。在本书的其余部分中,您将找到对这些原则的引用,以帮助您将这些点与它们在实践中的使用方式联系起来。提示的五项原则如下:

Give Direction 给予指导

Describe the desired style in detail, or reference a relevant persona

详细描述所需的风格,或参考相关人物

Specify Format 指定格式

Define what rules to follow, and the required structure of the response

定义要遵循的规则以及所需的响应结构

Provide Examples 提供例子

Insert a diverse set of test cases where the task was done correctly

插入正确完成任务的一组不同的测试用例

Evaluate Quality 评估质量

Identify errors and rate responses, testing what drives performance.

识别错误并评估响应速度,测试驱动性能的因素。

Divide Labor 分工

Split tasks into multiple steps, chained together for complex goals

将任务分成多个步骤,链接在一起以实现复杂的目标

These principles are not short-lived tips or hacks but are generally accepted conventions that are useful for working with any level of intelligence, biological or artificial. These principles are model-agnostic and should work to improve your prompt no matter which generative text or image model you’re using. We first published these principles in July 2022 in the blog post “Prompt Engineering: From Words to Art and Copy”, and they have stood the test of time, including mapping quite closely to OpenAI’s own Prompt Engineering Guide, which came a year later. Anyone who works closely with generative AI models is likely to converge on a similar set of strategies for solving common issues, and throughout this book you’ll see hundreds of demonstrative examples of how they can be useful for improving your prompts.

这些原则不是短暂的技巧或窍门,而是普遍接受的约定,对于任何级别的智能(无论是生物智能还是人工智能)都非常有用。这些原则与模型无关,无论您使用哪种生成文本或图像模型,都应该能够改善您的提示。我们于 2022 年 7 月在博客文章“即时工程:从文字到艺术和复制”中首次发布了这些原则,它们经受住了时间的考验,包括与一年后发布的 OpenAI 自己的即时工程指南非常接近。任何与生成式人工智能模型密切合作的人都可能会采用一套类似的策略来解决常见问题,在本书中,您将看到数百个说明性示例,说明它们如何有助于改进您的提示。

We have provided downloadable one-pagers for text and image generation you can use as a checklist when applying these principles. These were created for our popular Udemy course The Complete Prompt Engineering for AI Bootcamp (70,000+ students), which was based on the same principles but with different material to this book.

我们提供了可下载的用于文本和图像生成的单页程序,您可以在应用这些原则时将其用作清单。这些是为我们流行的 Udemy 课程“AI 训练营的完整提示工程”(超过 70,000 名学生)创建的,该课程基于相同的原理,但与本书的材料不同。

To show these principles apply equally well to prompting image models, let’s use the following example, and explain how to apply each of the Five Principles of Prompting to this specific scenario. Copy and paste the entire input prompt into the Midjourney Bot in Discord, including the link to the image at the beginning, after typing **/imagine** to trigger the prompt box to appear (requires a free Discord account, and a paid Midjourney account).

为了表明这些原则同样适用于提示图像模型,让我们使用以下示例,并解释如何将提示的五项原则应用于此特定场景。将整个输入提示复制并粘贴到 Discord 中的 Midjourney Bot 中,包括开头的图像链接,输入 **/imagine** 后触发提示框出现(需要免费的 Discord 帐户和付费帐户)中途帐户)。

Input: 输入:

https://s.mj.run/TKAsyhNiKmc stock photo of business meeting of 4 people watching on white MacBook on top of glass-top table, Panasonic, DC-GH5

Figure 1-2 shows the output.

图 1-2 显示了输出。

Figure 1-2. Stock photo of business meeting

图 1-2。商务会议的股票照片

This prompt takes advantage of Midjourney’s ability to take a base image as an example by uploading the image to Discord and then copy and pasting the URL into the prompt (https://s.mj.run/TKAsyhNiKmc), for which the royalty-free image from Unsplash is used (Figure 1-3). If you run into an error with the prompt, try uploading the image yourself and reviewing Midjourney’s documentation for any formatting changes.

此提示利用 Midjourney 的功能,以基本图像为例,将图像上传到 Discord,然后将 URL 复制并粘贴到提示中 (https://s.mj.run/TKAsyhNiKmc),为此,版税 -使用 Unsplash 的免费图像(图 1-3)。如果您遇到提示错误,请尝试自行上传图像并查看 Midjourney 的文档以了解任何格式更改。

Figure 1-3. Photo by Mimi Thian on Unsplash

图 1-3。照片由 Unsplash 上的 Mimi Thian 拍摄

Let’s compare this well-engineered prompt to what you get back from Midjourney if you naively ask for a stock photo in the simplest way possible. Figure 1-4 shows an example of what you get without prompt engineering, an image with a darker, more stylistic take on a stock photo than you’d typically expect.

让我们将这个精心设计的提示与您从中途天真地以最简单的方式索要库存照片时得到的提示进行比较。图 1-4 展示了您无需立即进行工程处理即可获得的示例,即与库存照片相比,图像的颜色比您通常预期的更暗、更具风格。

Input: 输入:

people in a business meeting

Figure 1-4 shows the output.

图 1-4 显示了输出。

Although less prominent an issue in v5 of Midjourney onwards, community feedback mechanisms (when users select an image to resize to a higher resolution, that choice may be used to train the model) have reportedly biased the model toward a fantasy aesthetic, which is less suitable for the stock photo use case. The early adopters of Midjourney came from the digital art world and naturally gravitated toward fantasy and sci-fi styles, which can be reflected in the results from the model even when this aesthetic is not suitable.

尽管在 Midjourney 的 v5 版本中这个问题不太突出,但据报道,社区反馈机制(当用户选择将图像大小调整为更高分辨率时,该选择可能会用于训练模型)使模型偏向于幻想美学,这是较少的适合库存照片用例。 Midjourney 的早期采用者来自数字艺术世界,自然偏向奇幻和科幻风格,即使这种审美并不适合,这也可以反映在模型的结果中。

Figure 1-4. People in a business meeting

图 1-4。商务会议中的人们

Throughout this book the examples used will be compatiable with ChatGPT Plus (GPT-4) as the text model and Midjourney v6 or Stable Diffusion XL as the image model, though we will specify if it’s important. These foundational models are the current state of the art and are good at a diverse range of tasks. The principles are intended to be future-proof as much as is possible, so if you’re reading this book when GPT-5, Midjourney v7, or Stable Diffusion XXL is out, or if you’re using another vendor like Google, everything you learn here should still prove useful.

本书中使用的示例将与作为文本模型的 ChatGPT Plus (GPT-4) 和作为图像模型的 Midjourney v6 或 Stable Diffusion XL 兼容,尽管我们将指定它是否重要。这些基础模型是当前最先进的模型,擅长执行各种任务。这些原则旨在尽可能面向未来,因此,如果您在 GPT-5、Midjourney v7 或 Stable Diffusion XXL 发布时阅读本书,或者如果您正在使用 Google 等其他供应商,那么一切你在这里学到的应该还是有用的。

1. Give Direction 1. 给予指导

One of the issues with the naive text prompt discussed earlier was that it wasn’t briefing the AI on what types of product names you wanted. To some extent, naming a product is a subjective endeavor, and without giving the AI an idea of what names you like, it has a low probability of guessing right.

前面讨论的天真的文本提示的问题之一是它没有向人工智能简要介绍您想要什么类型的产品名称。在某种程度上,为产品命名是一种主观努力,如果不让人工智能知道你喜欢什么名字,它猜对的可能性很低。

By the way, a human would also struggle to complete this task without a good brief, which is why creative and branding agencies require a detailed briefing on any task from their clients.

顺便说一句,如果没有良好的简报,人类也很难完成这项任务,这就是为什么创意和品牌机构需要客户提供有关任何任务的详细简报的原因。

TIP

Although it’s not a perfect mapping, it can be helpful to imagine what context a human might need for this task and try including it in the prompt.

尽管这不是一个完美的映射,但想象一下人类可能需要什么上下文来完成此任务并尝试将其包含在提示中可能会有所帮助。

In the example prompt we gave direction through the use of role-playing, in that case emulating the style of Steve Jobs, who was famous for iconically naming products. If you change this aspect of the prompt to someone else who is famous in the training data (as well as matching the examples to the right style), you’ll get dramatically different results.

在示例提示中,我们通过使用角色扮演来给出指导,在这种情况下模仿史蒂夫·乔布斯的风格,他以标志性的产品命名而闻名。如果您将提示的这方面更改为训练数据中著名的其他人(以及将示例与正确的风格相匹配),您将得到截然不同的结果。

Input: 输入:

Brainstorm a list of product names for a shoe that fits any foot size, in the style of Elon Musk.

Return the results as a comma-separated list, in this format: Product description: A shoe that fits any foot size Product names: [list of 3 product names]

Examples

Product description: A refrigerator that dispenses beer Product names: Teslacool, FridgeX, Neuradrink

Product description: A watch that can tell accurate time in space Product names: WatchX, iSpace, The Watch Company

Product description: A home milkshake maker Product names: ShakeX, The Milkshake Company, HyperShake

Output: 输出:

ShoeX, MarsFit, The Shoe Company

There are also some rules or best practices you would do well to follow, which could be included in the prompt as context to guide the AI toward a name that works. This technique is sometimes referred to as prewarming or internal retrieval, and it is simple but effective (Liu et al., 2021). Starting the conversation asking for best practice advice, then asking it to follow its own advice, can help a lot. In effect, you are using it to generate its own direction.

还有一些您最好遵循的规则或最佳实践,它们可以作为上下文包含在提示中,以指导人工智能找到有效的名称。这种技术有时被称为预热或内部检索,它简单但有效(Liu et al., 2021)。开始对话时寻求最佳实践建议,然后要求其遵循自己的建议,这会很有帮助。实际上,您正在使用它来生成自己的方向。

Input: 输入:

Please give me 5 tips for naming products based on expert industry advice.

Output: 输出:

Certainly! Naming a product is crucial as it often gives the first impression to potential customers. Here are five expert tips to guide you in naming products:

- Simplicity & Memorability: Opt for names that are short, easy to spell, and memorable. Long, complicated names can be difficult for customers to recall. Remember, the easier the name is to remember, the more likely it is that consumers will think of your product when making a purchase decision.

…

- Positive Connotations: Names that evoke positive emotions or imagery can create a favorable perception of your product. Avoid names that have negative or controversial associations in any of the primary languages or cultures where you intend to do business. It’s often worthwhile to conduct linguistic checks and market testing to ensure there aren’t unintended meanings.

Lastly, once you’ve shortlisted a few names, it’s a good idea to run them by diverse focus groups or conduct surveys to gauge reactions. Also, ensure that the name you choose is available for trademarking and doesn’t infringe on existing trademarks.

Then within the same chat window, where the model has the context of the past advice it gave, you ask your initial prompt for the task you wanted to complete.

然后在同一个聊天窗口中,模型具有它所提供的过去建议的上下文,您可以询问您想要完成的任务的初始提示。

Input: 输入:

Using this advice, can I have a list of product names for a pair of shoes that can fit any foot size?

Output: 输出:

Certainly! Based on the criteria given:

- Simplicity & Memorability

- Relevance & Descriptiveness

- Differentiation & Uniqueness

- Adaptable & Scalable

- Positive Connotations

Here’s a list of potential product names for shoes that can fit any foot size:

- FitAll Walkers: A simple and memorable name that instantly suggests a universal fit.

…

- TrueFit Universals: Emphasizing genuine comfort and fit for all.

Before finalizing any name, remember to check for trademarks to ensure there’s no conflict with existing products or brands.

Another fruitful strategy in our experience is to take the best advice out there for the task you want to accomplish and insert that context into the prompt. For example, you could take Brandwatch’s 5 Golden Rules for naming a product or another trusted external resource you find, and insert that as context into the prompt. This will increase the length of the prompt significantly, which costs more money (when using the API as a developer), but may be worth the trade-off if the quality of the response improves.

根据我们的经验,另一个富有成效的策略是针对您想要完成的任务获取最佳建议,并将该上下文插入提示中。例如,您可以采用 Brandwatch 的 5 条黄金法则来命名您找到的产品或其他受信任的外部资源,并将其作为上下文插入到提示中。这将显着增加提示的长度,这会花费更多的钱(当作为开发人员使用 API 时),但如果响应的质量提高,可能值得权衡。

Input: 输入:

Can I have a list of product names for a pair of shoes that can fit any foot size? Please follow the five rules below:

- It should be readable and writable. If your product name is hard to pronounce, people won’t talk about it, and if they can’t write it down (and spell it correctly!) when they hear it, how do you expect them to Google it?

Keep it simple and don’t go with any wacky spellings just for the sake of it.

…

- It should evoke an emotion, feeling, or idea. Your product name should tie back into what your product is, what the feeling you want people to have when experiencing your product is, and/or what idea are you trying to get across.

It should be emotive and inspiring.

Output: 输出:

- FlexFit Footwear

- OmniSize Sneakers

- AdaptStride Shoes …

- OmniComfort Kicks

- FlexSize Footwear

- Boundless Soles

There are other myriad ways of providing direction. In the image generation example, direction was given by specifying that the business meeting is taking place around a glass-top table. If you change only that detail, you can get a completely different image, as detailed in Figure 1-5.

还有其他无数种提供指导的方法。在图像生成示例中,通过指定商务会议在玻璃顶桌子周围举行来给出方向。如果仅更改该细节,您可以获得完全不同的图像,如图 1-5 所示。

Input: 输入:

https://s.mj.run/TKAsyhNiKmc stock photo of business meeting of four people gathered around a campfire outdoors in the woods, Panasonic, DC-GH5

Figure 1-5 shows the output.

图 1-5 显示了输出。

Figure 1-5. Stock photo of business meeting in the woods

图 1-5。在树林里举行商务会议的股票照片

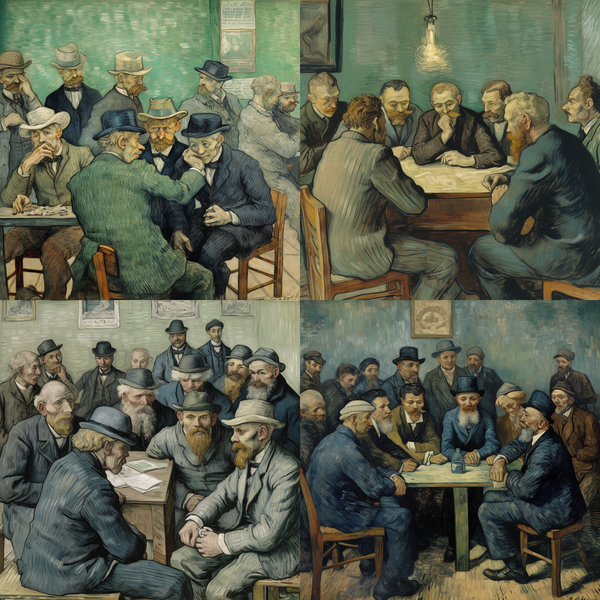

Role-playing is also important for image generation, and one of the quite powerful ways you can give Midjourney direction is to supply the name of an artist or art style to emulate. One artist that features heavily in the AI art world is Van Gogh, known for his bold, dramatic brush strokes and vivid use of colors. Watch what happens when you include his name in the prompt, as shown in Figure 1-6.

角色扮演对于图像生成也很重要,为中途提供指导的一种非常有效的方法是提供要模仿的艺术家或艺术风格的名字。梵高是人工智能艺术界中一位举足轻重的艺术家,他以其大胆、戏剧性的笔触和生动的色彩运用而闻名。观察当您在提示中包含他的名字时会发生什么,如图 1-6 所示。

Input: 输入:

people in a business meeting, by Van Gogh

Figure 1-6 shows the output.

图 1-6 显示了输出。

Figure 1-6. People in a business meeting, by Van Gogh

图 1-6。参加商务会议的人们,梵高

To get that last prompt to work, you need to strip back a lot of the other direction. For example, losing the base image and the words stock photo as well as the camera Panasonic, DC-GH5 helps bring in Van Gogh’s style. The problem you may run into is that often with too much direction, the model can quickly get to a conflicting combination that it can’t resolve. If your prompt is overly specific, there might not be enough samples in the training data to generate an image that’s consistent with all of your criteria. In cases like these, you should choose which element is more important (in this case, Van Gogh) and defer to that.

为了让最后一个提示起作用,你需要去掉很多其他方向的内容。例如,去掉底图和stock photo字样以及松下相机,DC-GH5有助于引入梵高的风格。您可能遇到的问题是,通常方向太多,模型很快就会出现无法解决的冲突组合。如果您的提示过于具体,训练数据中可能没有足够的样本来生成符合您所有标准的图像。在这种情况下,您应该选择哪个元素更重要(在本例中是梵高)并遵循它。

Direction is one of the most commonly used and broadest principles. It can take the form of simply using the right descriptive words to clarify your intent, or channeling the personas of relevant business celebrities. While too much direction can narrow the creativity of the model, too little direction is the more common problem.

方向是最常用和最广泛的原则之一。它可以采取简单地使用正确的描述性词语来阐明您的意图的形式,或者引导相关商业名人的角色。虽然太多的方向会缩小模型的创造力,但方向太少是更常见的问题。

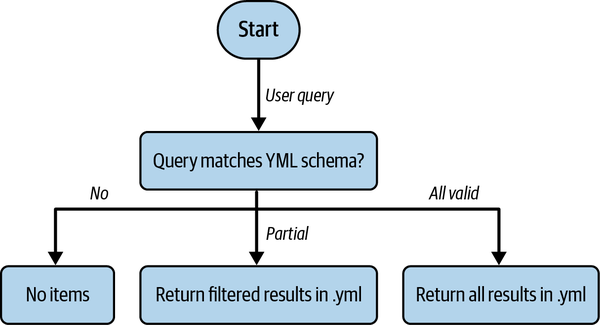

2. Specify Format 2. 指定格式

AI models are universal translators. Not only does that mean translating from French to English, or Urdu to Klingon, but also between data structures like JSON to YAML, or natural language to Python code. These models are capable of returning a response in almost any format, so an important part of prompt engineering is finding ways to specify what format you want the response to be in.

人工智能模型是通用翻译器。这不仅意味着从法语到英语、或从乌尔都语到克林贡语的翻译,还意味着在 JSON 到 YAML 等数据结构之间的翻译,或者从自然语言到 Python 代码的翻译。这些模型能够以几乎任何格式返回响应,因此提示工程的一个重要部分是找到方法来指定您希望响应采用的格式。

Every now and again you’ll find that the same prompt will return a different format, for example, a numbered list instead of comma separated. This isn’t a big deal most of the time, because most prompts are one-offs and typed into ChatGPT or Midjourney. However, when you’re incorporating AI tools into production software, occasional flips in format can cause all kinds of errors.

您时不时会发现相同的提示会返回不同的格式,例如,编号列表而不是逗号分隔。大多数时候这并不是什么大问题,因为大多数提示都是一次性的,并输入 ChatGPT 或 Midjourney 中。然而,当您将人工智能工具整合到生产软件中时,偶尔的格式翻转可能会导致各种错误。

Just like when working with a human, you can avoid wasted effort by specifying up front the format you expect the response to be in. For text generation models, it can often be helpful to output JSON instead of a simple ordered list because that’s the universal format for API responses, which can make it simpler to parse and spot errors, as well as to use to render the front-end HTML of an application. YAML is also another popular choice because it enforces a parseable structure while still being simple and human-readable.

就像与人合作时一样,您可以通过预先指定您期望响应的格式来避免浪费精力。对于文本生成模型,输出 JSON 而不是简单的有序列表通常会很有帮助,因为这是通用的API 响应的格式,可以更轻松地解析和发现错误,以及用于呈现应用程序的前端 HTML。 YAML 也是另一个流行的选择,因为它强制执行可解析的结构,同时仍然简单且易于阅读。

In the original prompt you gave direction through both the examples provided, and the colon at the end of the prompt indicated it should complete the list inline. To swap the format to JSON, you need to update both and leave the JSON uncompleted, so GPT-4 knows to complete it.

在原始提示中,您通过提供的两个示例给出了指示,提示末尾的冒号表示它应该内联完成列表。要将格式交换为 JSON,您需要更新两者并保留 JSON 不完整,以便 GPT-4 知道要完成它。

Input: 输入:

Return a comma-separated list of product names in JSON for “A pair of shoes that can fit any foot size.”. Return only JSON.

Examples: [{ “Product description”: “A home milkshake maker.”, “Product names”: [“HomeShaker”, “Fit Shaker”, “QuickShake”, “Shake Maker”] }, { “Product description”: “A watch that can tell accurate time in space.”, “Product names”: [“AstroTime”, “SpaceGuard”, “Orbit-Accurate”, “EliptoTime”]} ]

Output: 输出:

[

{

“Product description”: “A pair of shoes that can

fit any foot size.”,

“Product names”: [“FlexFit Footwear”, “OneSize Step”,

“Adapt-a-Shoe”, “Universal Walker”]

}

]

The output we get back is the completed JSON containing the product names. This can then be parsed and used programmatically, in an application or local script. It’s also easy from this point to check if there’s an error in the formatting using a JSON parser like Python’s standard json library, because broken JSON will result in a parsing error, which can act as a trigger to retry the prompt or investigate before continuing. If you’re still not getting the right format back, it can help to specify at the beginning or end of the prompt, or in the system message if using a chat model: You are a helpful assistant that only responds in JSON, or specify JSON output in the model parameters where available (this is called grammars with Llama models.

我们得到的输出是包含产品名称的完整 JSON。然后可以在应用程序或本地脚本中以编程方式解析和使用它。从现在起,使用 JSON 解析器(例如 Python 的标准 json 库)检查格式是否存在错误也很容易,因为损坏的 JSON 会导致解析错误,这可以作为触发器,在继续之前重试提示或进行调查。如果您仍然没有得到正确的格式,它可以帮助您在提示的开头或结尾指定,或者在系统消息中指定(如果使用聊天模型): You are a helpful assistant that only responds in JSON ,或者在中指定 JSON 输出可用的模型参数(这称为 Llama 模型的语法。

TIP

To get up to speed on JSON if you’re unfamiliar, W3Schools has a good introduction.

如果您不熟悉 JSON,为了快速了解 JSON,W3Schools 有一个很好的介绍。

For image generation models, format is very important, because the opportunities for modifying an image are near endless. They range from obvious formats like stock photo, illustration, and oil painting, to more unusual formats like dashcam footage, ice sculpture, or in Minecraft (see Figure 1-7).

对于图像生成模型,格式非常重要,因为修改图像的机会几乎是无穷无尽的。它们的范围从明显的格式(如 stock photo 、 illustration 和 oil painting )到更不寻常的格式(如 dashcam footage 、 ice sculpture (参见图 1-7)。

Input: 输入:

business meeting of four people watching on MacBook on top of table, in Minecraft

Figure 1-7 shows the output.

图 1-7 显示了输出。

Figure 1-7. Business meeting in Minecraft

图 1-7。 Minecraft 中的商务会议

When setting a format, it is often necessary to remove other aspects of the prompt that might clash with the specified format. For example, if you supply a base image of a stock photo, the result is some combination of stock photo and the format you wanted. To some degree, image generation models can generalize to new scenarios and combinations they haven’t seen before in their training set, but in our experience, the more layers of unrelated elements, the more likely you are to get an unsuitable image.

设置格式时,通常需要删除可能与指定格式冲突的提示的其他方面。例如,如果您提供库存照片的基本图像,则结果是库存照片和您想要的格式的某种组合。在某种程度上,图像生成模型可以泛化到他们以前在训练集中从未见过的新场景和组合,但根据我们的经验,不相关元素的层数越多,获得不合适图像的可能性就越大。

There is often some overlap between the first and second principles, Give Direction and Specify Format. The latter is about defining what type of output you want, for example JSON format, or the format of a stock photo. The former is about the style of response you want, independent from the format, for example product names in the style of Steve Jobs, or an image of a business meeting in the style of Van Gogh. When there are clashes between style and format, it’s often best to resolve them by dropping whichever element is less important to your final result.

第一原则和第二原则(给出方向和指定格式)之间经常有一些重叠。后者是关于定义您想要的输出类型,例如 JSON 格式或库存照片的格式。前者是关于您想要的响应风格,与格式无关,例如史蒂夫·乔布斯风格的产品名称,或梵高风格的商务会议图像。当风格和格式之间存在冲突时,通常最好通过删除对最终结果不太重要的元素来解决它们。

3. Provide Examples 3. 提供例子

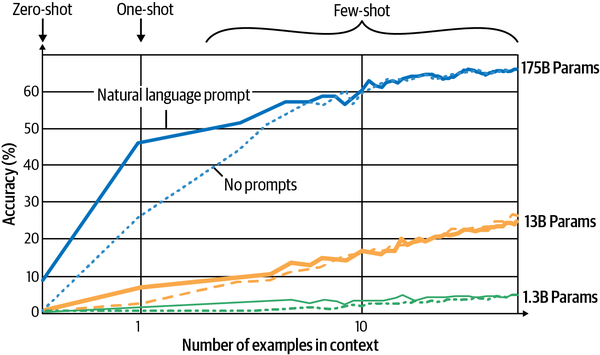

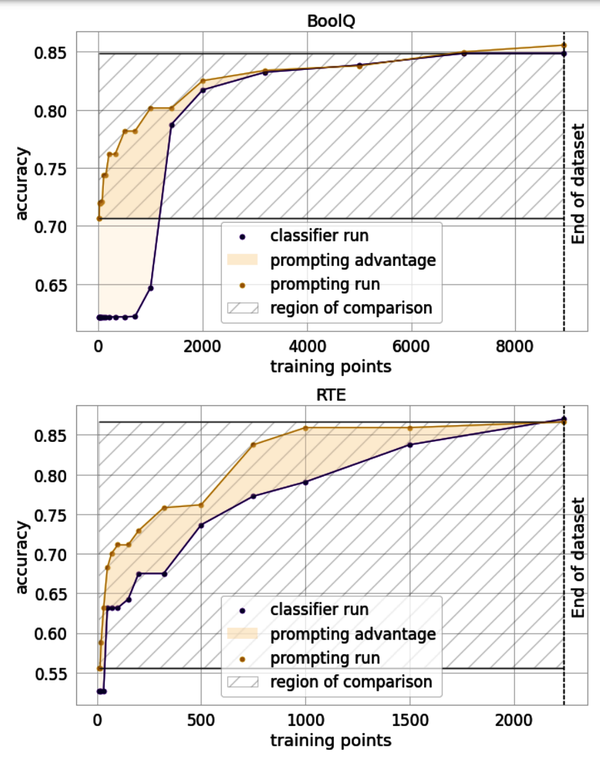

The original prompt didn’t give the AI any examples of what you think good names look like. Therefore, the response is approximate to an average of the internet, and you can do better than that. Researchers would call a prompt with no examples zero-shot, and it’s always a pleasant surprise when AI can even do a task zero shot: it’s a sign of a powerful model. If you’re providing zero examples, you’re asking for a lot without giving much in return. Even providing one example (one-shot) helps considerably, and it’s the norm among researchers to test how models perform with multiple examples (few-shot). One such piece of research is the famous GPT-3 paper “Language Models are Few-Shot Learners”, the results of which are illustrated in Figure 1-8, showing adding one example along with a prompt can improve accuracy in some tasks from 10% to near 50%!

最初的提示并没有给人工智能任何你认为好名字是什么样子的例子。因此,响应近似于互联网的平均水平,您可以做得更好。研究人员将没有示例的提示称为零样本,当人工智能甚至可以完成零样本任务时,总是令人惊喜:这是一个强大模型的标志。如果你提供的例子为零,那么你就要求很多却没有给予太多回报。即使提供一个示例(一次性)也会有很大帮助,并且研究人员使用多个示例(几次)来测试模型的表现是一种常态。其中一项研究是著名的 GPT-3 论文“Language Models are Few-Shot Learners”,其结果如图 1-8 所示,显示添加一个示例和提示可以将某些任务的准确性从 10 提高到 10。 % 接近 50%!

Figure 1-8. Number of examples in context

图 1-8。上下文中的示例数量

When briefing a colleague or training a junior employee on a new task, it’s only natural that you’d include examples of times that task had previously been done well. Working with AI is the same, and the strength of a prompt often comes down to the examples used. Providing examples can sometimes be easier than trying to explain exactly what it is about those examples you like, so this technique is most effective when you are not a domain expert in the subject area of the task you are attempting to complete. The amount of text you can fit in a prompt is limited (at the time of writing around 6,000 characters on Midjourney and approximately 32,000 characters for the free version of ChatGPT), so a lot of the work of prompt engineering involves selecting and inserting diverse and instructive examples.

当向同事介绍新任务或对初级员工进行新任务培训时,您很自然地会列举之前完成该任务的例子。使用人工智能也是一样,提示的强度通常取决于所使用的示例。提供示例有时比尝试准确解释您喜欢的示例更容易,因此当您不是要完成的任务的主题领域的领域专家时,此技术最有效。提示中可以容纳的文本量是有限的(在 Midjourney 上编写时约为 6,000 个字符,在 ChatGPT 免费版本中约为 32,000 个字符),因此提示工程的大量工作涉及选择和插入各种不同的文本。具有指导意义的例子。

There’s a trade-off between reliability and creativity: go past three to five examples and your results will become more reliable, while sacrificing creativity. The more examples you provide, and the lesser the diversity between them, the more constrained the response will be to match your examples. If you change all of the examples to animal names in the previous prompt, you’ll have a strong effect on the response, which will reliably return only names including animals.

可靠性和创造力之间需要权衡:经过三到五个例子,你的结果会变得更加可靠,但会牺牲创造力。您提供的示例越多,它们之间的多样性越小,响应与您的示例相匹配的限制就越大。如果您将上一个提示中的所有示例更改为动物名称,将对响应产生很大影响,该响应将可靠地仅返回包括动物的名称。

Input: 输入:

Brainstorm a list of product names for a shoe that fits any foot size.

Return the results as a comma-separated list, in this format: Product description: A shoe that fits any foot size Product names: [list of 3 product names]

Examples:

Product description: A home milkshake maker. Product names: Fast Panda, Healthy Bear, Compact Koala

Product description: A watch that can tell accurate time in space. Product names: AstroLamb, Space Bear, Eagle Orbit

Product description: A refrigerator that dispenses beer Product names: BearFridge, Cool Cat, PenguinBox

Output: 输出:

Product description: A shoe that fits any foot size Product names: FlexiFox, ChameleonStep, PandaPaws

Of course this runs the risk of missing out on returning a much better name that doesn’t fit the limited space left for the AI to play in. Lack of diversity and variation in examples is also a problem in handling edge cases, or uncommon scenarios. Including one to three examples is easy and almost always has a positive effect, but above that number it becomes essential to experiment with the number of examples you include, as well as the similarity between them. There is some evidence (Hsieh et al., 2023) that direction works better than providing examples, and it typically isn’t straightforward to collect good examples, so it’s usually prudent to attempt the principle of Give Direction first.

当然,这存在着错过返回一个更好的名称的风险,该名称不适合人工智能发挥作用的有限空间。示例中缺乏多样性和变化也是处理边缘情况或不常见场景的问题。包含一到三个示例很容易,并且几乎总是会产生积极的效果,但超过这个数字,就必须尝试包含的示例数量以及它们之间的相似性。有一些证据(Hsieh 等人,2023)表明指导比提供示例更有效,而且收集好的示例通常并不容易,因此首先尝试“给予指导”原则通常是谨慎的。

In the image generation space, providing examples usually comes in the form of providing a base image in the prompt, called img2img in the open source Stable Diffusion community. Depending on the image generation model being used, these images can be used as a starting point for the model to generate from, which greatly affects the results. You can keep everything about the prompt the same but swap out the provided base image for a radically different effect, as in Figure 1-9.

在图像生成领域,提供示例通常以在提示中提供基础图像的形式出现,在开源 Stable Diffusion 社区中称为 img2img。根据所使用的图像生成模型,这些图像可以用作模型生成的起点,这极大地影响结果。您可以保持提示的所有内容相同,但将提供的基本图像替换为完全不同的效果,如图 1-9 所示。

Input: 输入:

stock photo of business meeting of 4 people watching on white MacBook on top of glass-top table, Panasonic, DC-GH5

Figure 1-9 shows the output.

图 1-9 显示了输出。

Figure 1-9. Stock photo of business meeting of four people

图 1-9。四人商务会议图库照片

In this case, by substituting for the image shown in Figure 1-10, also from Unsplash, you can see how the model was pulled in a different direction and incorporates whiteboards and sticky notes now.

在本例中,通过替换同样来自 Unsplash 的图 1-10 中所示的图像,您可以看到模型如何被拉向不同的方向,并且现在如何合并白板和便签。

CAUTION 警告

These examples demonstrate the capabilities of image generation models, but we would exercise caution when uploading base images for use in prompts. Check the licensing of the image you plan to upload and use in your prompt as the base image, and avoid using clearly copyrighted images. Doing so can land you in legal trouble and is against the terms of service for all the major image generation model providers.

这些示例演示了图像生成模型的功能,但我们在上传用于提示的基础图像时要小心。检查您计划上传并在提示中用作基础图像的图像的许可,并避免使用明显受版权保护的图像。这样做可能会给您带来法律麻烦,并且违反所有主要图像生成模型提供商的服务条款。

Figure 1-10. Photo by Jason Goodman on Unsplash

图 1-10。杰森·古德曼 (Jason Goodman) 在 Unsplash 上拍摄的照片

4. Evaluate Quality 4. 评估质量

As of yet, there has been no feedback loop to judge the quality of your responses, other than the basic trial and error of running the prompt and seeing the results, referred to as blind prompting. This is fine when your prompts are used temporarily for a single task and rarely revisited. However, when you’re reusing the same prompt multiple times or building a production application that relies on a prompt, you need to be more rigorous with measuring results.

到目前为止,除了运行提示并查看结果的基本尝试和错误(称为盲目提示)之外,还没有反馈循环来判断您的回答质量。当您的提示暂时用于单个任务并且很少重新访问时,这很好。但是,当您多次重复使用相同的提示或构建依赖于提示的生产应用程序时,您需要更加严格地测量结果。

There are a number of ways performance can be evaluated, and it depends largely on what tasks you’re hoping to accomplish. When a new AI model is released, the focus tends to be on how well the model did on evals (evaluations), a standardized set of questions with predefined answers or grading criteria that are used to test performance across models. Different models perform differently across different types of tasks, and there is no guarantee a prompt that worked previously will translate well to a new model. OpenAI has made its evals framework for benchmarking performance of LLMs open source and encourages others to contribute additional eval templates.

评估绩效的方法有很多种,这在很大程度上取决于您希望完成的任务。当新的人工智能模型发布时,人们关注的焦点往往是该模型在评估(eval)方面的表现如何,评估是一组带有预定义答案或评分标准的标准化问题,用于测试跨模型的性能。不同的模型在不同类型的任务中表现不同,并且不能保证以前有效的提示能够很好地转换为新模型。 OpenAI 已将其用于 LLMs 性能基准测试的评估框架开源,并鼓励其他人贡献更多评估模板。

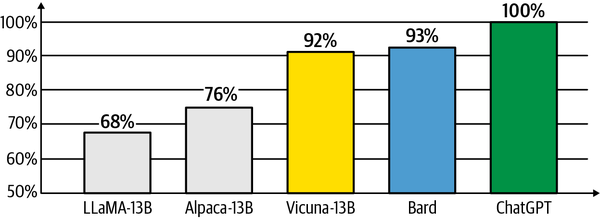

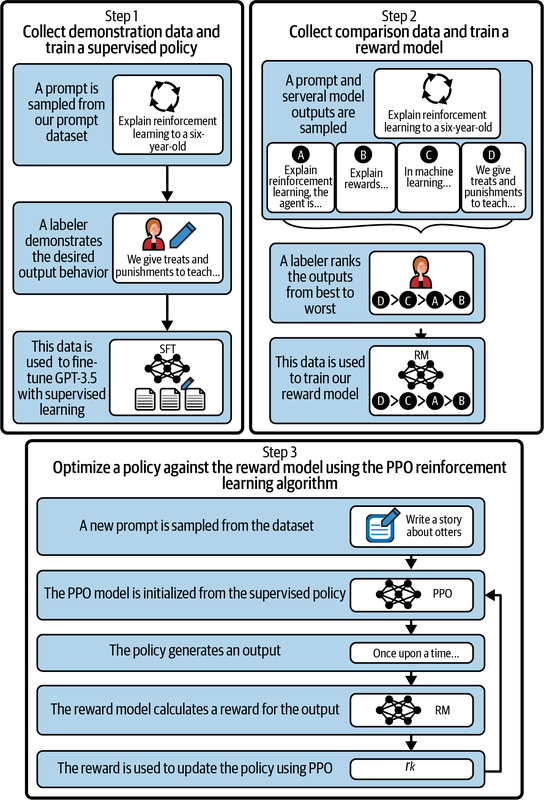

In addition to the standard academic evals, there are also more headline-worthy tests like GPT-4 passing the bar exam. Evaluation is difficult for more subjective tasks, and can be time-consuming or prohibitively costly for smaller teams. In some instances researchers have turned to using more advanced models like GPT-4 to evaluate responses from less sophisticated models, as was done with the release of Vicuna-13B, a fine-tuned model based on Meta’s Llama open source model (see Figure 1-11).

除了标准的学术评估之外,还有更多值得关注的测试,例如通过律师资格考试的 GPT-4。对于更主观的任务来说,评估很困难,对于较小的团队来说,评估可能非常耗时或成本高昂。在某些情况下,研究人员转向使用 GPT-4 等更先进的模型来评估不太复杂的模型的响应,就像发布 Vicuna-13B 所做的那样,Vicuna-13B 是一个基于 Meta 的 Llama 开源模型的微调模型(见图 1) -11)。

Figure 1-11. Vicuna GPT-4 Evals

图 1-11。骆驼毛 GPT-4 评估

More rigorous evaluation techniques are necessary when writing scientific papers or grading a new foundation model release, but often you will only need to go just one step above basic trial and error. You may find that a simple thumbs-up/thumbs-down rating system implemented in a Jupyter Notebook can be enough to add some rigor to prompt optimization, without adding too much overhead. One common test is to see whether providing examples is worth the additional cost in terms of prompt length, or whether you can get away with providing no examples in the prompt. The first step is getting responses for multiple runs of each prompt and storing them in a spreadsheet, which we will do after setting up our environment.

在撰写科学论文或对新的基础模型版本进行评分时,需要更严格的评估技术,但通常您只需要在基本的试错之上再迈出一步。您可能会发现,在 Jupyter Notebook 中实现的简单的赞成/反对评级系统足以为提示优化添加一些严格性,而不会增加太多开销。一种常见的测试是看看提供示例是否值得在提示长度方面付出额外的成本,或者您是否可以在提示中不提供示例。第一步是获取每个提示多次运行的响应并将其存储在电子表格中,我们将在设置环境后执行此操作。

You can install the OpenAI Python package with pip install openai. If you’re running into compatability issues with this package, create a virtual environment and install our requirements.txt (instructions in the preface).

您可以使用 pip install openai 安装 OpenAI Python 包。如果您遇到此软件包的兼容性问题,请创建一个虚拟环境并安装我们的requirements.txt(前言中的说明)。

To utilize the API, you’ll need to create an OpenAI account and then navigate here for your API key.

要使用该 API,您需要创建一个 OpenAI 帐户,然后在此处导航以获取您的 API 密钥。

WARNING 警告

Hardcoding API keys in scripts is not recommended due to security reasons. Instead, utilize environment variables or configuration files to manage your keys.

出于安全原因,不建议在脚本中对 API 密钥进行硬编码。相反,利用环境变量或配置文件来管理您的密钥。

Once you have an API key, it’s crucial to assign it as an environment variable by executing the following command, replacing api_key with your actual API key value:

获得 API 密钥后,执行以下命令将其分配为环境变量至关重要,并将 api_key 替换为您的实际 API 密钥值:

export

Or on Windows: 或者在 Windows 上:

set

Alternatively, if you’d prefer not to preset an API key, then you can manually set the key while initializing the model, or load it from an .env file using python-dotenv. First, install the library with pip install python-dotenv, and then load the environment variables with the following code at the top of your script or notebook:

或者,如果您不想预设 API 密钥,则可以在初始化模型时手动设置密钥,或使用 python-dotenv 从 .env 文件加载它。首先,使用 pip install python-dotenv 安装库,然后在脚本或笔记本顶部使用以下代码加载环境变量:

from

The first step is getting responses for multiple runs of each prompt and storing them in a spreadsheet.

第一步是获取每个提示多次运行的响应并将其存储在电子表格中。

Input: 输入:

# Define two variants of the prompt to test zero-shot

Output: 输出:

variant prompt

0 A Product description: A pair of shoes that can …

1 A Product description: A pair of shoes that can …

2 A Product description: A pair of shoes that can …

3 A Product description: A pair of shoes that can …

4 A Product description: A pair of shoes that can …

5 B Product description: A home milkshake maker.\n…

6 B Product description: A home milkshake maker.\n…

7 B Product description: A home milkshake maker.\n…

8 B Product description: A home milkshake maker.\n…

9 B Product description: A home milkshake maker.\n…

response

0 1. Adapt-a-Fit Shoes \n2. Omni-Fit Footwear \n… 1 1. OmniFit Shoes\n2. Adapt-a-Sneaks \n3. OneFi… 2 1. Adapt-a-fit\n2. Flexi-fit shoes\n3. Omni-fe… 3 1. Adapt-A-Sole\n2. FitFlex\n3. Omni-FitX\n4. … 4 1. Omni-Fit Shoes\n2. Adapt-a-Fit Shoes\n3. An… 5 Adapt-a-Fit, Perfect Fit Shoes, OmniShoe, OneS… 6 FitAll, OmniFit Shoes, SizeLess, AdaptaShoes 7 AdaptaFit, OmniShoe, PerfectFit, AllSizeFit. 8 FitMaster, AdaptoShoe, OmniFit, AnySize Footwe… 9 Adapt-a-Shoe, PerfectFit, OmniSize, FitForm

Here we’re using the OpenAI API to generate model responses to a set of prompts and storing the results in a dataframe, which is saved to a CSV file. Here’s how it works:

在这里,我们使用 OpenAI API 生成对一组提示的模型响应,并将结果存储在数据框中,该数据框保存到 CSV 文件中。它的工作原理如下:

Two prompt variants are defined, and each variant consists of a product description, seed words, and potential product names, but

prompt_Bprovides two examples.

定义了两个提示变体,每个变体由产品描述、种子词和潜在产品名称组成,但prompt_B提供了两个示例。Import statements are called for the Pandas library, OpenAI library, and os library.

Pandas 库、OpenAI 库和 os 库调用导入语句。The

get_responsefunction takes a prompt as input and returns a response from thegpt-3.5-turbomodel. The prompt is passed as a user message to the model, along with a system message to set the model’s behavior.get_response函数将提示作为输入,并从gpt-3.5-turbo模型返回响应。提示作为用户消息传递到模型,并连同用于设置模型行为的系统消息。Two prompt variants are stored in the

test_promptslist.test_prompts列表中存储了两个提示变体。An empty list

responsesis created to store the generated responses, and the variablenum_testsis set to 5.

创建一个空列表responses来存储生成的响应,并将变量num_tests设置为 5。A nested loop is used to generate responses. The outer loop iterates over each prompt, and the inner loop generates

num_tests(five in this case) number of responses per prompt.

嵌套循环用于生成响应。外部循环迭代每个提示,内部循环为每个提示生成num_tests(本例中为 5)个响应。The

enumeratefunction is used to get the index and value of each prompt intest_prompts. This index is then converted to a corresponding uppercase letter (e.g., 0 becomes A, 1 becomes B) to be used as a variant name.enumerate函数用于获取test_prompts中每个提示的索引和值。然后将该索引转换为相应的大写字母(例如,0 变为 A,1 变为 B)以用作变体名称。For each iteration, the

get_responsefunction is called with the current prompt to generate a response from the model.

对于每次迭代,都会使用当前提示调用get_response函数,以从模型生成响应。A dictionary is created with the variant name, the prompt, and the model’s response, and this dictionary is appended to the

responseslist.

使用变体名称、提示和模型响应创建一个字典,并将该字典附加到responses列表中。

Once all responses have been generated, the

responseslist (which is now a list of dictionaries) is converted into a Pandas DataFrame.

生成所有响应后,responses列表(现在是字典列表)将转换为 Pandas DataFrame。This dataframe is then saved to a CSV file with the Pandas built-in

to_csvfunction, making the file responses.csv withindex=Falseso as to not write row indices.

然后使用 Pandas 内置to_csv函数将该数据帧保存到 CSV 文件中,使文件response.csv 带有index=False以便不写入行索引。Finally, the dataframe is printed to the console.

最后,数据帧被打印到控制台。

Having these responses in a spreadsheet is already useful, because you can see right away even in the printed response that prompt_A (zero-shot) in the first five rows is giving us a numbered list, whereas prompt_B (few-shot) in the last five rows tends to output the desired format of a comma-separated inline list. The next step is to give a rating on each of the responses, which is best done blind and randomized to avoid favoring one prompt over another.

在电子表格中包含这些响应已经很有用,因为即使在打印的响应中,您也可以立即看到前五行中的 prompt_A (零样本)为我们提供了一个编号列表,而 prompt_B (few-shot) 倾向于输出以逗号分隔的内联列表的所需格式。下一步是对每个答案进行评分,最好是盲目和随机进行评分,以避免偏向某一提示而不是另一提示。

Input: 输入:

import

The output is shown in Figure 1-12:

输出如图 1-12 所示:

Figure 1-12. Thumbs-up/thumbs-down rating system

图 1-12。赞成/反对评级系统

If you run this in a Jupyter Notebook, a widget displays each AI response, with a thumbs-up or thumbs-down button (see Figure 1-12) This provides a simple interface for quickly labeling responses, with minimal overhead. If you wish to do this outside of a Jupyter Notebook, you could change the thumbs-up and thumbs-down emojis for Y and N, and implement a loop using the built-in input() function, as a text-only replacement for iPyWidgets.

如果您在 Jupyter Notebook 中运行此程序,小部件会显示每个 AI 响应,并带有“赞成”或“反对”按钮(见图 1-12)。这提供了一个简单的界面,可以以最小的开销快速标记响应。如果您希望在 Jupyter Notebook 之外执行此操作,您可以更改 Y 和 N 的拇指向上和拇指向下表情符号,并使用内置 input() 函数以文本形式实现循环- 仅替代 iPyWidgets。

Once you’ve finished labeling the responses, you get the output, which shows you how each prompt performs.

完成对响应的标记后,您将获得输出,其中显示每个提示的执行情况。

Output: 输出:

A/B testing completed. Here’s the results: variant count score 0 A 5 0.2 1 B 5 0.6

The dataframe was shuffled at random, and each response was labeled blind (without seeing the prompt), so you get an accurate picture of how often each prompt performed. Here is the step-by-step explanation:

数据框被随机打乱,每个响应都被标记为盲(看不到提示),因此您可以准确了解每个提示执行的频率。以下是分步说明:

Three modules are imported:

ipywidgets,IPython.display, andpandas.ipywidgetscontains interactive HTML widgets for Jupyter Notebooks and the IPython kernel.IPython.displayprovides classes for displaying various types of output like images, sound, displaying HTML, etc. Pandas is a powerful data manipulation library.

导入三个模块:ipywidgets、IPython.display和pandas。ipywidgets包含 Jupyter Notebooks 和 IPython 内核的交互式 HTML 小部件。IPython.display提供了用于显示各种类型输出的类,如图像、声音、显示 HTML 等。Pandas 是一个强大的数据操作库。The pandas library is used to read in the CSV file responses.csv, which contains the responses you want to test. This creates a Pandas DataFrame called

df.

pandas 库用于读取 CSV 文件response.csv,其中包含您要测试的响应。这将创建一个名为df的 Pandas DataFrame。dfis shuffled using thesample()function withfrac=1, which means it uses all the rows. Thereset_index(drop=True)is used to reset the indices to the standard 0, 1, 2, …, n index.df使用sample()函数与frac=1进行混洗,这意味着它使用所有行。reset_index(drop=True)用于将索引重置为标准 0, 1, 2, …, n 索引。The script defines

response_indexas 0. This is used to track which response from the dataframe the user is currently viewing.

该脚本将response_index定义为 0。这用于跟踪用户当前正在查看的数据帧的响应。A new column

feedbackis added to the dataframedfwith the data type asstror string.

新列feedback将添加到数据框df中,数据类型为str或字符串。Next, the script defines a function

on_button_clicked(b), which will execute whenever one of the two buttons in the interface is clicked.

接下来,该脚本定义一个函数on_button_clicked(b),只要单击界面中的两个按钮之一,该函数就会执行。The function first checks the

descriptionof the button clicked was the thumbs-up button (\U0001F44D; ), and sets

), and sets user_feedbackas 1, or if it was the thumbs-down button (\U0001F44E ), it sets

), it sets user_feedbackas 0.

该函数首先检查单击的按钮的description是竖起大拇指按钮(\U0001F44D; ),并将

),并将 user_feedback设置为1,或者如果是拇指向下按钮 (\U0001F44E ),则将

),则将 user_feedback设置为 0。Then it updates the

feedbackcolumn of the dataframe at the currentresponse_indexwithuser_feedback.

然后它用user_feedback更新当前response_index处数据帧的feedback列。After that, it increments

response_indexto move to the next response.

之后,它会递增response_index以移至下一个响应。If

response_indexis still less than the total number of responses (i.e., the length of the dataframe), it calls the functionupdate_response().

如果response_index仍然小于响应总数(即数据帧的长度),则调用函数update_response()。If there are no more responses, it saves the dataframe to a new CSV file results.csv, then prints a message, and also prints a summary of the results by variant, showing the count of feedback received and the average score (mean) for each variant.

如果没有更多响应,它将数据帧保存到新的 CSV 文件 results.csv,然后打印一条消息,并按变体打印结果摘要,显示收到的反馈计数和平均分数(平均值)每个变体。

The function

update_response()fetches the next response from the dataframe, wraps it in paragraph HTML tags (if it’s not null), updates theresponsewidget to display the new response, and updates thecount_labelwidget to reflect the current response number and total number of responses.

函数update_response()从数据帧中获取下一个响应,将其包装在段落 HTML 标记中(如果它不为空),更新response小部件以显示新响应,并更新 < b2> 小部件反映当前响应数和响应总数。Two widgets,

response(an HTML widget) andcount_label(a Label widget), are instantiated. Theupdate_response()function is then called to initialize these widgets with the first response and the appropriate label.

两个小部件response(HTML 小部件)和count_label(Label 小部件)被实例化。然后调用update_response()函数以使用第一个响应和适当的标签来初始化这些小部件。Two more widgets,

thumbs_up_buttonandthumbs_down_button(both Button widgets), are created with thumbs-up and thumbs-down emoji as their descriptions, respectively. Both buttons are configured to call theon_button_clicked()function when clicked.

另外两个小部件thumbs_up_button和thumbs_down_button(都是按钮小部件)是分别使用拇指向上和拇指向下表情符号作为其描述来创建的。这两个按钮都配置为在单击时调用on_button_clicked()函数。The two buttons are grouped into a horizontal box (

button_box) using theHBoxfunction.

使用HBox函数将两个按钮分组到一个水平框 (button_box) 中。Finally, the

response,button_box, andcount_labelwidgets are displayed to the user using thedisplay()function from theIPython.displaymodule.

最后,使用IPython.display、button_box和count_label小部件。 b4> 模块。

A simple rating system such as this one can be useful in judging prompt quality and encountering edge cases. Usually in less than 10 test runs of a prompt you uncover a deviation, which you otherwise wouldn’t have caught until you started using it in production. The downside is that it can get tedious rating lots of responses manually, and your ratings might not represent the preferences of your intended audience. However, even small numbers of tests can reveal large differences between two prompting strategies and reveal nonobvious issues before reaching production.

像这样的简单评级系统可用于判断即时质量和遇到边缘情况。通常,在提示的不到 10 次测试运行中,您就会发现一个偏差,否则您将无法发现该偏差,直到您开始在生产中使用它为止。缺点是,它可能会手动对大量回复进行繁琐的评级,并且您的评级可能不代表目标受众的偏好。然而,即使少量的测试也可以揭示两种提示策略之间的巨大差异,并在投入生产之前揭示不明显的问题。

Iterating on and testing prompts can lead to radical decreases in the length of the prompt and therefore the cost and latency of your system. If you can find another prompt that performs equally as well (or better) but uses a shorter prompt, you can afford to scale up your operation considerably. Often you’ll find in this process that many elements of a complex prompt are completely superfluous, or even counterproductive.

迭代和测试提示可以大大缩短提示的长度,从而降低系统的成本和延迟。如果您能找到另一个性能同样好(或更好)但使用更短提示的提示,您就可以大幅扩展您的操作。通常,您会发现在此过程中,复杂提示的许多元素完全是多余的,甚至适得其反。

The thumbs-up or other manually labeled indicators of quality don’t have to be the only judging criteria. Human evaluation is generally considered to be the most accurate form of feedback. However, it can be tedious and costly to rate many samples manually. In many cases, as in math or classification use cases, it may be possible to establish ground truth (reference answers to test cases) to programmatically rate the results, allowing you to scale up considerably your testing and monitoring efforts. The following is not an exhaustive list because there are many motivations for evaluating your prompt programmatically:

竖起大拇指或其他手动标记的质量指标不一定是唯一的评判标准。人类评估通常被认为是最准确的反馈形式。然而,手动对许多样本进行评级可能是乏味且昂贵的。在许多情况下,如在数学或分类用例中,可以建立基本事实(测试用例的参考答案)以编程方式对结果进行评级,从而允许您大幅扩展测试和监控工作。以下并不是详尽的列表,因为以编程方式评估提示的动机有很多:

Cost 成本

Prompts that use a lot of tokens, or work only with more expensive models, might be impractical for production use.

使用大量令牌或仅适用于更昂贵的模型的提示对于生产用途可能不切实际。

Latency 潜伏

Equally the more tokens there are, or the larger the model required, the longer it takes to complete a task, which can harm user experience.

同样,代币越多,或者所需的模型越大,完成任务所需的时间就越长,这可能会损害用户体验。

Calls 通话

Many AI systems require multiple calls in a loop to complete a task, which can seriously slow down the process.

许多人工智能系统需要循环多次调用才能完成任务,这会严重减慢进程。

Performance 表现

Implement some form of external feedback system, for example a physics engine or other model for predicting real-world results.

实施某种形式的外部反馈系统,例如物理引擎或其他用于预测现实世界结果的模型。

Classification 分类

Determine how often a prompt correctly labels given text, using another AI model or rules-based labeling.

使用其他 AI 模型或基于规则的标签确定提示正确标记给定文本的频率。

Reasoning 推理

Work out which instances the AI fails to apply logical reasoning or gets the math wrong versus reference cases.

与参考案例相比,找出人工智能未能应用逻辑推理或数学错误的实例。

Hallucinations 幻觉

See how frequently you encouner hallucinations, as measured by invention of new terms not included in the prompt’s context.

看看您遇到幻觉的频率,通过发明未包含在提示上下文中的新术语来衡量。

Safety 安全

Flag any scenarios where the system might return unsafe or undesirable results using a safety filter or detection system.

使用安全过滤器或检测系统标记系统可能返回不安全或不良结果的任何场景。

Refusals 拒绝

Find out how often the system incorrectly refuses to fulfill a reasonable user request by flagging known refusal language.

通过标记已知的拒绝语言,了解系统错误地拒绝满足合理用户请求的频率。

Adversarial 对抗性的

Make the prompt robust against known prompt injection attacks that can get the model to run undesirable prompts instead of what you programmed.

使提示能够抵御已知的提示注入攻击,这些攻击可以使模型运行不需要的提示而不是您编程的提示。

Similarity 相似

Use shared words and phrases (BLEU or ROGUE) or vector distance (explained in Chapter 5) to measure similarity between generated and reference text.

使用共享单词和短语(BLEU 或 ROGUE)或矢量距离(第 5 章中说明)来衡量生成文本和参考文本之间的相似性。

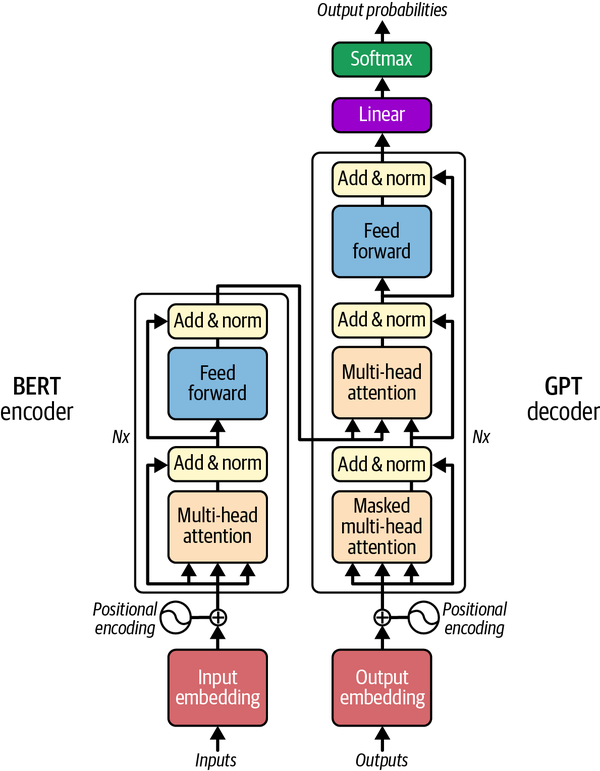

Once you start rating which examples were good, you can more easily update the examples used in your prompt as a way to continuously make your system smarter over time. The data from this feedback can also feed into examples for fine-tuning, which starts to beat prompt engineering once you can supply a few thousand examples, as shown in Figure 1-13.

一旦您开始评估哪些示例不错,您就可以更轻松地更新提示中使用的示例,从而随着时间的推移不断使您的系统变得更加智能。来自此反馈的数据还可以输入到示例中进行微调,一旦您可以提供几千个示例,微调就开始胜过即时工程,如图 1-13 所示。

Figure 1-13. How many data points is a prompt worth?

图 1-13。一个提示值多少个数据点?

Graduating from thumbs-up or thumbs-down, you can implement a 3-, 5-, or 10-point rating system to get more fine-grained feedback on the quality of your prompts. It’s also possible to determine aggregate relative performance through comparing responses side by side, rather than looking at responses one at a time. From this you can construct a fair across-model comparison using an Elo rating, as is popular in chess and used in the Chatbot Arena by lmsys.org.

从赞成或反对毕业,您可以实施 3 分、5 分或 10 分评级系统,以获得有关提示质量的更细粒度的反馈。还可以通过并排比较响应来确定总体相对性能,而不是一次查看一个响应。由此,您可以使用 Elo 评级构建公平的跨模型比较,这在国际象棋中很流行,并由 lmsys.org 在 Chatbot Arena 中使用。

For image generation, evaluation usually takes the form of permutation prompting, where you input multiple directions or formats and generate an image for each combination. Images can than be scanned or later arranged in a grid to show the effect that different elements of the prompt can have on the final image.

对于图像生成,评估通常采用排列提示的形式,您输入多个方向或格式,并为每个组合生成图像。然后可以扫描图像或稍后将图像排列在网格中,以显示提示的不同元素对最终图像的影响。

Input: 输入:

{stock photo, oil painting, illustration} of business meeting of {four, eight} people watching on white MacBook on top of glass-top table

In Midjourney this would be compiled into six different prompts, one for every combination of the three formats (stock photo, oil painting, illustration) and two numbers of people (four, eight).

在《中途旅程》中,这将被编译成六种不同的提示,一种对应三种格式(库存照片、油画、插图)和两种人数(四人、八人)的每一种组合。

Input: 输入:

stock photo of business meeting of four people watching on white MacBook on top of glass-top table

stock photo of business meeting of eight people watching on white MacBook on top of glass-top table

oil painting of business meeting of four people watching on white MacBook on top of glass-top table

oil painting of business meeting of eight people watching on white MacBook on top of glass-top table

illustration of business meeting of four people watching on white MacBook on top of glass-top table

illustration of business meeting of eight people watching on white MacBook on top of glass-top table

Each prompt generates its own four images as usual, which makes the output a little harder to see. We have selected one from each prompt to upscale and then put them together in a grid, shown as Figure 1-14. You’ll notice that the model doesn’t always get the correct number of people (generative AI models are surprisingly bad at math), but it has correctly inferred the general intention by adding more people to the photos on the right than the left.

每个提示都会像往常一样生成自己的四个图像,这使得输出有点难以查看。我们从每个提示中选择一个进行升级,然后将它们放在一个网格中,如图 1-14 所示。你会注意到,该模型并不总是能得到正确的人数(生成式 AI 模型的数学出奇地糟糕),但它通过在右侧照片中添加比左侧更多的人来正确推断出总体意图。

Figure 1-14 shows the output.

图 1-14 显示了输出。

Figure 1-14. Prompt permutations grid

图 1-14。提示排列网格

With models that have APIs like Stable Diffusion, you can more easily manipulate the photos and display them in a grid format for easy scanning. You can also manipulate the random seed of the image to fix a style in place for maximum reproducibility. With image classifiers it may also be possible to programmatically rate images based on their safe content, or if they contain certain elements associated with success or failure.

借助具有稳定扩散等 API 的模型,您可以更轻松地操作照片并以网格格式显示它们,以便于扫描。您还可以操纵图像的随机种子来固定样式,以获得最大的可重复性。使用图像分类器,还可以根据图像的安全内容,或者图像是否包含与成功或失败相关的某些元素,以编程方式对图像进行评级。

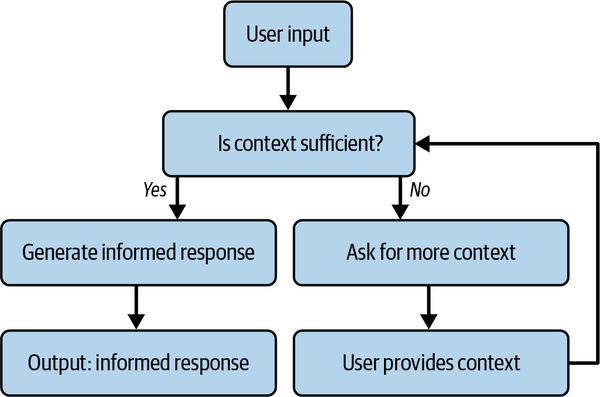

5. Divide Labor 5. 分工

As you build out your prompt, you start to get to the point where you’re asking a lot in a single call to the AI. When prompts get longer and more convoluted, you may find the responses get less deterministic, and hallucinations or anomalies increase. Even if you manage to arrive at a reliable prompt for your task, that task is likely just one of a number of interrelated tasks you need to do your job. It’s natural to start exploring how many other of these tasks could be done by AI and how you might string them together.

当你构建提示时,你开始在一次对人工智能的调用中提出很多问题。当提示变得更长、更复杂时,您可能会发现响应的确定性降低,并且幻觉或异常现象会增加。即使您设法为您的任务找到可靠的提示,该任务也可能只是您完成工作所需的众多相互关联的任务之一。我们很自然地会开始探索人工智能可以完成多少其他任务以及如何将它们串联起来。

One of the core principles of engineering is to use task decomposition to break problems down into their component parts, so you can more easily solve each individual problem and then reaggregate the results. Breaking your AI work into multiple calls that are chained together can help you accomplish more complex tasks, as well as provide more visibility into what part of the chain is failing.

工程的核心原则之一是使用任务分解将问题分解为各个组成部分,这样您就可以更轻松地解决每个单独的问题,然后重新聚合结果。将您的 AI 工作分解为多个链接在一起的调用可以帮助您完成更复杂的任务,并更清楚地了解该链的哪个部分发生了故障。

There are lots of factors that go into product naming, and an important task is naively outsourced to the AI with no visibility into how it’s weighing the importance of these factors (if at all). The way our current system works, we’re getting a list of names, but all names are displayed with equal importance with no further context for helping us decide. Fortunately AI tools are capable of self-evaluation; if we add a second step to our task, we can automatically check for nondesirable outputs.

产品命名涉及很多因素,而一项重要任务被天真地外包给人工智能,而无法了解它如何权衡这些因素的重要性(如果有的话)。按照我们当前系统的工作方式,我们会得到一个名称列表,但所有名称都以同等重要性显示,没有进一步的上下文来帮助我们做出决定。幸运的是,人工智能工具能够自我评估;如果我们在任务中添加第二步,我们可以自动检查不需要的输出。

Input: 输入:

Rate this list of product names for a pair of shoes that can fit any foot size. The rating should be out of 10, inline next to the product name:

UniFit SoleStrides FlexiSize All-Fit Shoes OmniFit FootFlex … AnyStep AdaptiWalkers FlexiRange UniversalSteps PerfectGlide MultiFit Shoes

Output: 输出:

UniFit SoleStrides: 7/10 FlexiSize All-Fit Shoes: 8/10 OmniFit FootFlex: 7/10 … AnyStep AdaptiWalkers: 7/10 FlexiRange UniversalSteps: 7.5/10 PerfectGlide MultiFit Shoes: 9/10

This ranking is based on clarity, memorability, and how well the name communicates the unique selling point of the product (that they can fit any foot size). Remember, these ratings are subjective and the ultimate success of a product name also depends on the target market, branding, and marketing efforts.